Airflow : Airflow is a platform to programmatically author, schedule and monitor workflows. Use Airflow to author workflows as Directed Acyclic Graphs (DAGs) of tasks. The Airflow scheduler executes your tasks on an array of workers while following the specified dependencies. Rich command line utilities make performing complex surgeries on DAGs a snap. The rich user interface makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues when needed.

DAG (Directed Acyclic Graph) : A DAG is a collection of all the tasks you want to run, organized in a way that reflects their relationships and dependencies. A DAG is defined in a Python script, which represents the DAG's structure (tasks and their dependencies) as code.

Scheduler : The Airflow scheduler monitors all tasks and DAGs, then triggers the task instances once their dependencies are complete. Behind the scenes, the scheduler spins up a subprocess, which monitors and stays in sync with all DAGs in the specified DAG directory. Once per minute, by default, the scheduler collects DAG parsing results and checks whether any active tasks can be triggered.
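To make the idea of "trigger a task once its dependencies are complete" concrete, here is a minimal sketch that uses only Python's standard library (`graphlib`, Python 3.9+), not Airflow itself. The task names are made up for illustration, and the loop is a simplified model of scheduling, not how the Airflow scheduler is actually implemented.

```python
from graphlib import CycleError, TopologicalSorter  # standard library, Python 3.9+


def trigger_waves(deps):
    """Group tasks into waves; a task joins a wave only after all of its
    dependencies were completed in earlier waves."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises CycleError if the graph contains a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)  # pretend every triggered task succeeded
    return waves


def is_acyclic(deps):
    """Return True if the dependency graph is a valid DAG."""
    try:
        trigger_waves(deps)
        return True
    except CycleError:
        return False


# Hypothetical tasks: each key maps to the set of tasks it depends on.
deps = {
    "extract_a": set(),
    "extract_b": set(),
    "report": {"extract_a", "extract_b"},
}

print(trigger_waves(deps))  # [['extract_a', 'extract_b'], ['report']]
print(is_acyclic({"a": {"b"}, "b": {"a"}}))  # False
```

The two extract tasks have no dependencies, so they can be triggered together; `report` only becomes ready once both have finished. The second call shows why the graph must be acyclic: two tasks that wait on each other can never be triggered.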

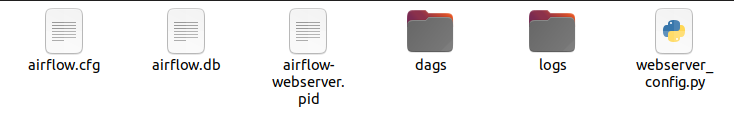

Before you get started, install Airflow first. Then create a dags folder in your Airflow directory.

In the dags folder, create a Python script and write the code below into it.

    from datetime import datetime

    from airflow import DAG
    from airflow.operators.python import PythonOperator


    def hello_world():
        # Task logic: the printed message ends up in the task log
        print("Hello Airflow!")


    # The DAG runs hourly; catchup=False skips runs for past intervals
    with DAG(
        dag_id="hello_world1",
        start_date=datetime(2021, 8, 19),
        schedule_interval="@hourly",
        catchup=False,
    ) as dag:
        python_task1 = PythonOperator(
            task_id="python_task",
            python_callable=hello_world,
        )

        # Single task, so there are no dependencies to declare
        python_task1
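Because the task logic is a plain Python function, you can sanity-check it on its own before handing it to Airflow. The sketch below does not need Airflow installed; `capture_output` is a helper name invented for this example.

```python
import io
from contextlib import redirect_stdout


def hello_world():
    print("Hello Airflow!")


def capture_output(func):
    """Call func and return everything it printed to stdout."""
    buffer = io.StringIO()
    with redirect_stdout(buffer):
        func()
    return buffer.getvalue()


print(repr(capture_output(hello_world)))  # 'Hello Airflow!\n'
```

If the function misbehaves here, it will misbehave inside the PythonOperator too, so this is a cheap first check.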

dag_id (str) : The id of the DAG. It must consist exclusively of alphanumeric characters, dashes, dots and underscores.

start_date (datetime) : The timestamp from which the scheduler will attempt to backfill.

schedule_interval : Defines how often the DAG runs. It can be a timedelta object, which gets added to your latest task instance's execution_date to figure out the next schedule, or a cron expression or preset such as '@hourly', as used in the code above.

catchup (bool) : Perform scheduler catchup (or only run latest)? Defaults to True.

PythonOperator : Executes a Python callable.
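The schedule_interval and catchup descriptions above can be worked through with plain datetime arithmetic. This is a simplified model under stated assumptions, not Airflow's own code: the function names `next_runs` and `runs_to_create` are invented for this sketch, and it assumes a run is created for an interval only once that interval has ended.

```python
from datetime import datetime, timedelta


def next_runs(execution_date, interval, count):
    """Add the interval repeatedly to the latest execution_date."""
    return [execution_date + interval * (i + 1) for i in range(count)]


def runs_to_create(start_date, interval, now, catchup):
    """Return the start of every interval that has already ended by `now`.
    With catchup=False, keep only the most recent one."""
    starts = []
    current = start_date
    while current + interval <= now:
        starts.append(current)
        current += interval
    return starts if catchup else starts[-1:]


start = datetime(2021, 8, 19)
hourly = timedelta(hours=1)
now = datetime(2021, 8, 19, 3, 30)  # assumed "current time" for the example

print([d.isoformat() for d in next_runs(start, hourly, 3)])
# ['2021-08-19T01:00:00', '2021-08-19T02:00:00', '2021-08-19T03:00:00']

print(len(runs_to_create(start, hourly, now, catchup=True)))  # 3
print(runs_to_create(start, hourly, now, catchup=False))
# [datetime.datetime(2021, 8, 19, 2, 0)]
```

With catchup=True, all three hourly intervals that ended before 03:30 get a run; with catchup=False, only the latest one (starting at 02:00) does. That is why the example DAG sets catchup=False: otherwise a start_date far in the past would produce a long backlog of runs.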

On your system, open two terminals.

In terminal-1, run:

    airflow webserver

Paste http://0.0.0.0:8080 into your browser. It will show a login page; enter your username and password. Then find your dag_id in the list and click on it.

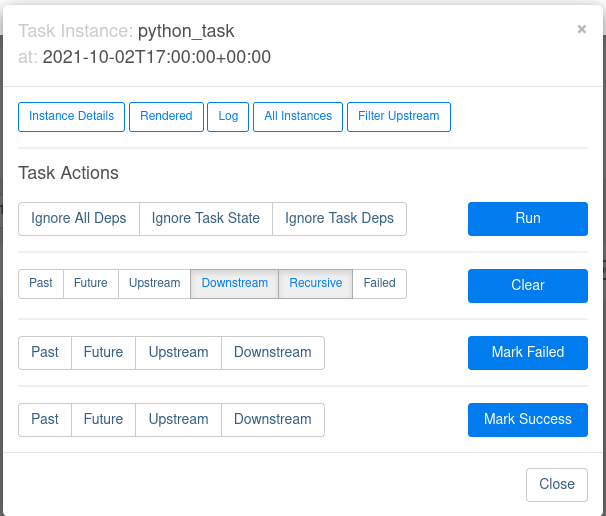

To run the DAG, switch the toggle on as shown above. Then click on Graph View, where you will see python_task. Click on it.

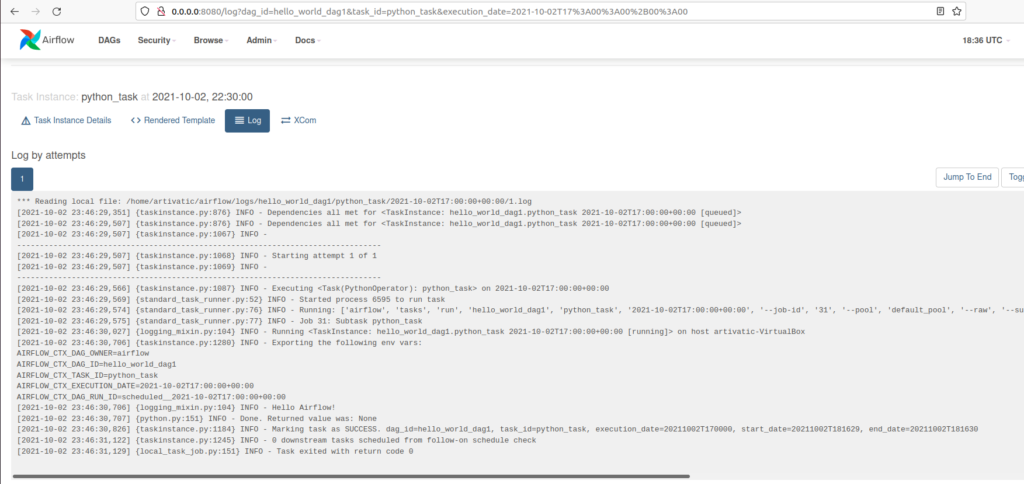

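Click on Log. You will see the task's output there, including the Hello Airflow! message.

In terminal-2, run the scheduler, which must be running for the task to actually execute:

    airflow scheduler

If you face any issues, let us know in the comments 👍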